Information Theoretic Quantities

The following presents key concepts of Information Theory that will be used later to train generative models

How can we measure information?

Information Theory is the mathematical study of the quantification and transmission of information, proposed by Claude Shannon in his seminal work: A Mathematical Theory of Communication, 1948

Shannon considered the output of a noisy source as a random variable \(X\)

The RV takes one of \(M\) possible values from the alphabet \(\mathcal{A} = \{x_1, x_2, x_3, \ldots, x_M\}\)

Each value \(x_i\) has an associated probability \(P(X=x_i) = p_i\)

Consider the following question: What is the amount of information carried by \(x_i\)?

Shannon defined the amount of information as

\[
I(x_i) = \log_2 \frac{1}{p_i} = -\log_2 p_i
\]

which is measured in bits

Note

One bit is the amount of information needed to choose between two equiprobable states

Example: A meteorological station in 1920 that sends tomorrow’s weather prediction from Niebla to Valdivia via telegraph

Tomorrow’s weather is a random variable

The dictionary of messages: (1) Rainy, (2) Cloudy, (3) Partially cloudy, (4) Sunny

Assume that their probabilities are: \(p_1=1/2\), \(p_2=1/4\), \(p_3=1/8\), \(p_4=1/8\)

What is the minimum number of yes/no questions (equiprobable) needed to guess tomorrow’s weather?

Is it going to rain?

No: Is it going to be cloudy?

No: Is it going to be sunny?

What is then the amount of information of each message?

Rainy: \(\log_2 \frac{1}{p_1} = \log_2 2 = 1\) bit

Cloudy: \(\log_2 \frac{1}{p_2} = \log_2 4 = 2\) bits

Partially cloudy and Sunny: \(\log_2 \frac{1}{p_3} = \log_2 \frac{1}{p_4} = \log_2 8 = 3\) bits each
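These values can be checked numerically. The following is a minimal Python sketch; the names `weather_probs` and `information_bits` are chosen here for illustration and do not come from the original text.

```python
import math

# Message probabilities from the weather example
weather_probs = {
    "Rainy": 1 / 2,
    "Cloudy": 1 / 4,
    "Partially cloudy": 1 / 8,
    "Sunny": 1 / 8,
}

def information_bits(p):
    """Amount of information, in bits, of an outcome with probability p."""
    return math.log2(1.0 / p)

for message, p in weather_probs.items():
    print(f"{message}: {information_bits(p):.0f} bits")
```

The least likely messages (Partially cloudy and Sunny) come out with the largest amount of information.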

Important

The larger the probability of a message, the less information it carries

Shannon’s entropy

After defining the amount of information for a state, Shannon defined the average information of the source \(X\) as

\[
H(X) = \sum_{i=1}^M p_i \log_2 \frac{1}{p_i} = -\sum_{i=1}^M p_i \log_2 p_i
\]

and called it the entropy of the source

Note

Entropy is the “average information of the source”

Properties:

Entropy is nonnegative: \(H(X) \geq 0\)

Entropy is equal to zero when \(p_j = 1 \wedge p_i = 0, i \neq j\)

Entropy is maximum when \(X\) is uniformly distributed \(p_i = \frac{1}{M}\), \(H(X) = \log_2(M)\)

Note

The more random the source is the larger its entropy
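The properties above can be illustrated with a small Python sketch. The helper `entropy_bits` and the three example distributions are assumptions made here for illustration; outcomes with zero probability are skipped, following the usual convention that they contribute nothing to the sum.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

weather = [1 / 2, 1 / 4, 1 / 8, 1 / 8]  # source from the weather example
certain = [1.0, 0.0, 0.0, 0.0]          # one outcome always occurs
uniform = [1 / 4] * 4                   # all outcomes equally likely

print(entropy_bits(weather))  # 1.75
print(entropy_bits(certain))  # 0.0
print(entropy_bits(uniform))  # 2.0, i.e. log2(4)
```

For the weather source the entropy is 1.75 bits, which matches the average number of yes/no questions in the example: \(1 \cdot \frac{1}{2} + 2 \cdot \frac{1}{4} + 3 \cdot \frac{1}{4} = 1.75\).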

For continuous variables the differential entropy is defined as

\[
H(X) = -\int p(x) \log p(x) \,dx
\]

where \(p(x)\) is the probability density function (pdf) of \(X\)
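As an illustration, the differential entropy of a Gaussian can be approximated numerically and compared with its known closed form \(\frac{1}{2}\log(2\pi e \sigma^2)\). This is only a sketch under stated assumptions: natural logarithms are used (so the result is in nats), the integral is truncated to a finite interval, and the function names are hypothetical.

```python
import math

def gaussian_pdf(x, mu=0.0, sigma=1.0):
    """Density of a Gaussian with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def differential_entropy_nats(pdf, lo, hi, steps=20_000):
    """Approximate -integral of p(x) log p(x) dx with a midpoint Riemann sum."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        p = pdf(lo + (k + 0.5) * dx)
        if p > 0.0:  # skip points where the density underflows to zero
            total -= p * math.log(p) * dx
    return total

sigma = 2.0
numeric = differential_entropy_nats(lambda x: gaussian_pdf(x, 0.0, sigma), -40.0, 40.0)
closed_form = 0.5 * math.log(2.0 * math.pi * math.e * sigma**2)
print(numeric, closed_form)
```

Unlike the discrete entropy, the differential entropy can be negative: the closed form above is below zero whenever \(\sigma^2 < \frac{1}{2\pi e}\).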

Relative Entropy: Kullback-Leibler (KL) divergence

Consider a continuous random variable \(X\) and two distributions \(q(x)\) and \(p(x)\) defined on its probability space

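The relative entropy between these distributions is

\[
D_{\text{KL}}\left[p(x)||q(x)\right] = \int p(x) \log \frac{p(x)}{q(x)} \,dx = \int p(x) \log p(x) \,dx - \int p(x) \log q(x) \,dx
\]

which is also known as the Kullback-Leibler divergence

The first term on the right hand side is the negative entropy of \(p(x)\)

The second term, \(-\int p(x) \log q(x) \,dx\), is called the cross-entropy of \(q(x)\) relative to \(p(x)\)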

Interpretations of KL

Coding: Expected number of “extra bits” needed to code \(p(x)\) using a code optimal for \(q(x)\)

Bayesian modeling: Amount of information lost when \(q(x)\) is used as a model for \(p(x)\)
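The split into entropy and cross-entropy, and the "extra bits" reading, can be checked on a discrete analogue of the definition (sums instead of integrals, base-2 logarithms). This is a minimal sketch; the function names and the uniform model `q` are assumptions for illustration, and `q` is assumed to be nonzero wherever `p` is nonzero.

```python
import math

def entropy_bits(p):
    """Entropy of p in bits."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy_bits(p, q):
    """Average code length when samples from p are coded with a code optimal for q."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_bits(p, q):
    """Discrete KL divergence of q from p, in bits."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p = [1 / 2, 1 / 4, 1 / 8, 1 / 8]  # true distribution (weather example)
q = [1 / 4, 1 / 4, 1 / 4, 1 / 4]  # model: all messages equally likely

print(entropy_bits(p))           # 1.75
print(cross_entropy_bits(p, q))  # 2.0
print(kl_bits(p, q))             # 0.25
```

Coding the weather messages with a code designed for the uniform model costs 2 bits per message on average instead of 1.75, so the divergence is the 0.25 extra bits.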

Property: Non-negativity

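The KL divergence is non-negative

\[
D_{\text{KL}}\left[p(x)||q(x)\right] \geq 0
\]

with the equality holding for \(p(x) \equiv q(x)\)

This is given by the Gibbs inequality

\[
-\int p(x) \log p(x) \,dx \leq -\int p(x) \log q(x) \,dx
\]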
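A short sketch of why the divergence cannot be negative, assuming natural logarithms and using the elementary bound \(\log t \leq t - 1\) (with equality only at \(t = 1\)):

\[
-D_{\text{KL}}\left[p(x)||q(x)\right] = \int p(x) \log \frac{q(x)}{p(x)} \,dx \leq \int p(x) \left( \frac{q(x)}{p(x)} - 1 \right) dx = \int q(x) \,dx - \int p(x) \,dx = 0
\]

Both densities integrate to one, so the right hand side vanishes, and equality requires \(q(x) = p(x)\) wherever \(p(x) > 0\).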

Note

The entropy of \(p(x)\) is less than or equal to the cross-entropy of \(q(x)\) relative to \(p(x)\)

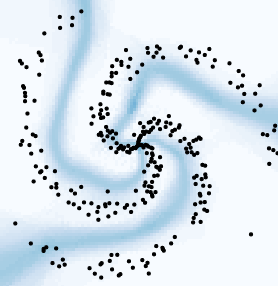

Property: Asymmetry

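The KL divergence is asymmetric: in general

\[
D_{\text{KL}}\left[p(x)||q(x)\right] \neq D_{\text{KL}}\left[q(x)||p(x)\right]
\]

The KL divergence is not a proper distance (it does not satisfy the triangle inequality either)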

Forward and Reverse KL have different meanings (we will explore them soon)
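The asymmetry is easy to observe on a discrete example. In the sketch below the two distributions and the helper `kl_bits` are hypothetical choices made for illustration; swapping the arguments changes the result.

```python
import math

def kl_bits(p, q):
    """Discrete KL divergence of q from p, in bits (assumes q > 0 where p > 0)."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.5, 0.5]  # fair coin
q = [0.9, 0.1]  # heavily biased coin

forward = kl_bits(p, q)  # roughly 0.737 bits
reverse = kl_bits(q, p)  # roughly 0.531 bits
print(forward, reverse)
```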

Property: Relation with mutual information

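The KL divergence is related to the mutual information between two random variables \(X\) and \(Y\) as

\[
\text{MI}(X, Y) = D_{\text{KL}}\left[p(x, y)||p(x)p(y)\right] = \iint p(x, y) \log \frac{p(x, y)}{p(x)p(y)} \,dx\,dy
\]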
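For discrete variables this relation can be sketched in a few lines of Python, using the standard identity that the mutual information is the KL divergence between the joint distribution and the product of its marginals. The function name and the two joint tables below are assumptions chosen for illustration.

```python
import math

def mutual_information_bits(joint):
    """Mutual information in bits from a joint probability table (list of rows)."""
    px = [sum(row) for row in joint]        # marginal of X (rows)
    py = [sum(col) for col in zip(*joint)]  # marginal of Y (columns)
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:  # zero-probability cells contribute nothing
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi

independent = [[0.25, 0.25], [0.25, 0.25]]  # joint equals product of marginals
dependent = [[0.5, 0.0], [0.0, 0.5]]        # Y is always equal to X

print(mutual_information_bits(independent))  # 0.0
print(mutual_information_bits(dependent))    # 1.0
```

Independent variables share no information, while two perfectly coupled fair binary variables share exactly one bit.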

See also

See chapter 1 of D. MacKay’s book for more details on Information Theory