Bayesian Linear Regression

Contents

import holoviews as hv

hv.extension('bokeh')

import jax.numpy as jnp

import jax.random as random

import numpyro.distributions as dists

Bayesian Linear Regression¶

In linear regression we have a

continuous D-dimensional input \(x\)

continuous one-dimensional target \(y\)

related by a linear mapping

The model is specified by \(\theta=(b, w_1, w_2, \ldots, w_D)\)

Least squares solution¶

Typically, we fit this model by minimizing the squared errors

or in matrix form

where

The solution of the linear system of equations in matrix form is

i.e the famous ordinary least squares (OLS) solution

Note

The linear regression model is linear on \(\theta\). If we apply a non-linear transformation on \(x\) the solution for \(\theta\) does not change, e.g. polynomial and trigonometric regression. The only difference will be in \(\Phi\).

Probabilistic linear regression¶

We model our observations as an additive combination of the linear modelo and noise

Assumming that the noise is iid and Gaussian distributed with variance \(\sigma_\epsilon^2\) then

Additionally, we may want to discourage large values of \(\theta~\) by placing a prior

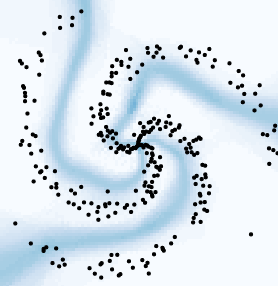

The prior on the parameters gives us the space of possible models before presenting data

x_test = jnp.linspace(-5, 5, num=100)

key = random.PRNGKey(1234)

prior = dists.MultivariateNormal(jnp.zeros((2,)), 5*jnp.eye(2))

theta_sample = prior.rsample(key, sample_shape=(100,))

predictive = []

for (b, w) in theta_sample:

predictive.append(x_test*w + b)

plots = []

for line in predictive:

plots.append(hv.Curve((x_test, line)))

hv.Overlay(plots).opts(hv.opts.Curve(color='#30a2da', width=500, alpha=0.1))

The lines corresponds to samples from the prior predictive distribution

We can evaluate the models arising from these parameter combinations on the test data

Point-estimate solution (MAP)¶

The space of feasible solutions is constrained by by presenting data

Considering the previous setting and a dataset \(\mathcal{D} = \{ (x_1, y_1), (x_2, y_2), \ldots, (x_N, y_N) \}\), the Maximum a posteriori estimator of \(\theta~\) is given by

the result is

where \(\lambda = \sigma_\epsilon^2 \Sigma_\theta^{-1}\)

Note

This is the ridge regression or regularized least squares solution

What happens if the variance of the prior tends to infinity?

Bayesian solution for the parameters¶

In this case we want the posterior of \(\theta~\) given the dataset

Assuming that we know \(\sigma_\epsilon\) we can write

The likelihood is gaussian and the prior is gaussian, so

and (with a bit of algebra) it can be shown that this corresponds to a gaussian distribution (gaussian is conjugate to itself)

with parameters

Iterative framework: We can present data and update the distribution of \(\theta~\)

Example: Fitting a line

We assume a zero-mean and diagonal covariance gaussian prior

mb, mw = 0., 0.

sb, sw = 5., 5.

S_theta = jnp.diag(jnp.array([sb**2, sw**2]))

mu_theta = jnp.array([mb, mw])

seps = 1. # What happens if this is larger/smaller?

key = random.PRNGKey(1234)

theta_sample = dists.MultivariateNormal(mu_theta, S_theta).rsample(key, sample_shape=(100,))

distb = hv.Distribution(theta_sample[:, 0], 'b').opts(height=125)

distw = hv.Distribution(theta_sample[:, 1], 'w').opts(width=125)

hv.Bivariate(theta_sample, kdims=['b', 'w']).opts(cmap='Blues', line_width=0, filled=True, width=350) << distw << distb

We observe data at \(x=2\), \(y=2\) and compute the posterior

def update_parameters(S_current, mu_current, x, y, seps):

Phi = jnp.array([[1.0, x]])

y = jnp.array([y])

S_updated = seps**2*jnp.linalg.inv(jnp.dot(Phi.T, Phi) + seps**2*jnp.linalg.inv(S_current))

mu_updated = jnp.dot(S_updated, jnp.linalg.solve(S_current, mu_current))

mu_updated += jnp.dot(S_updated, jnp.dot(Phi.T, y))/seps**2

return S_updated, mu_updated

S_theta, mu_theta = update_parameters(S_theta, mu_theta, 2., 2., seps)

display(S_theta, mu_theta)

key, subkey = random.split(key)

theta_sample = dists.MultivariateNormal(mu_theta, S_theta).rsample(key, sample_shape=(100,))

DeviceArray([[20.039701 , -9.920645 ],

[-9.920645 , 5.1587353]], dtype=float32)

DeviceArray([0.39682388, 0.7936516 ], dtype=float32)

distb = hv.Distribution(theta_sample[:, 0], 'b').opts(height=125)

distw = hv.Distribution(theta_sample[:, 1], 'w').opts(width=125)

hv.Bivariate(theta_sample, kdims=['b', 'w']).opts(cmap='Blues', line_width=0, filled=True, width=350) << distw << distb

with this the space of possible models is constrained, as shown by the samples of the posterior predictive distribution

predictive = []

for (b, w) in theta_sample:

predictive.append(x_test*w + b)

plots = []

for line in predictive:

plots.append(hv.Curve((x_test, line)))

data = hv.ErrorBars([(2,2,2*seps)])

hv.Overlay(plots).opts(hv.opts.Curve(color='#30a2da', width=500, alpha=0.1)) * data

Let’s assume that we observe a additional data point at \(x=-2\), \(y=-2\)

We will use the previous posterior as prior and update the parameters again

S_theta, mu_theta = update_parameters(S_theta, mu_theta, -2., -2., seps)

display(S_theta, mu_theta)

key, subkey = random.split(key)

theta_sample = dists.MultivariateNormal(mu_theta, S_theta).rsample(key, sample_shape=(100,))

DeviceArray([[ 4.9019602e-01, -2.9072593e-08],

[-2.9072593e-08, 1.2437809e-01]], dtype=float32)

DeviceArray([1.1920929e-07, 9.9502492e-01], dtype=float32)

distb = hv.Distribution(theta_sample[:, 0], 'b').opts(height=125)

distw = hv.Distribution(theta_sample[:, 1], 'w').opts(width=125)

hv.Bivariate(theta_sample, kdims=['b', 'w']).opts(cmap='Blues', line_width=0, filled=True, width=350) << distw << distb

The space of possible models is further reduced

predictive = []

for (b, w) in theta_sample:

predictive.append(x_test*w + b)

plots = []

for line in predictive:

plots.append(hv.Curve((x_test, line)))

data = hv.ErrorBars([(2,2,2*seps), (-2,-2, 2*seps)])

hv.Overlay(plots).opts(hv.opts.Curve(color='#30a2da', width=500, alpha=0.1)) * data

Bayesian solution for the predictions¶

Don’t forget our goal

We want a model to predict \(y\) for unseen examples \(x\) given our training set

We can write this in “bayesian” as \(p(y | x, \mathcal{D})\), the posterior predictive distribution

Previously we looked at samples from this distribution. We can obtain an expression for it by marginalizing \(\theta\) in

note that \(y\) is conditionally independant of \(\mathcal{D}\) given \(\theta\)

For our linear regression

Note

The posterior predictive is Gaussian, because the convolution of gaussians is gaussian

If we consider that \(N\) samples were presented and that \(\mu_0=0\) then

which is the MAP estimator, and

Finally, the variance (uncertainty) for the new \(x\) is

Important

The variance of the prediction has contribution from the noise (irreducible) and the model

Uncertainty grows when we depart from the observed data points

Phi = jnp.ones(shape=(100, 2))

Phi = Phi.at[:, 1].set(x_test)

ftheta = jnp.dot(Phi, mu_theta)

sx = jnp.sqrt(jnp.diag(seps**2 + jnp.dot(jnp.dot(Phi, S_theta), Phi.T)))

mean = hv.Curve((x_test, ftheta)).opts(width=500, color='#30a2da')

data = hv.ErrorBars([(2,2,2*seps), (-2,-2, 2*seps)])

uncertainty = hv.Spread((x_test, ftheta, 2*sx)).opts(color='#30a2da', alpha=0.3)

mean * data * uncertainty

See also

Chapter 18.1 of D. Barber’s book shows the bayesian linear regression where \(\sigma_\epsilon\) is not assummed to be known (normal inverse gamma prior)